Fall 2019 - Biologically encoded augmented reality (integrated cockpit)

Information floods the center of our visual field and often saturates the focus of our attention, yet there are parallel channels in the visual system constantly and unconsciously processing our environment. There is a dormant potential to activate these channels to challenge the limits of perception.

This demonstration will argue for the development and application of real-time, adaptive, perceptual skins, projected onto the observer’s immediate environment and computationally generated to target and stimulate key visual processing pathways. By activating biological processes responsible for edge detection, curve estimation, and image motion, I propose that these perceptual skins can change the observer’s proprioception as they move through dynamic environments.

This work represents a new intersection of the fields of vision science, computational imaging, and display technologies and has the potential to challenge the way we generate media for human consumption in active environments.

Spring 2019 - Biologically encoded augmented reality

Our visual mechanisms constitute a set of powerful, networked input controls responsible for parsing and encoding the natural world before us. If we can interject parallel streams of information tailored to the subtle and sometimes preconscious mechanisms of vision, there is a potential to multiplex within our spatial and attentional bandwidth, to deliver more dynamic, context-rich experiences.

This research explores the potential to augment the perceived driving experience by delivering adaptive, context-aware stimuli. Presented to low-level visual mechanisms (e.g., low-mid threshold determinations, edge to noise, orientation, luminance, illuminance, central to peripheral motion reversal, translation manipulation via flicker), the stimuli are computationally generated to target several dimensions of an operator’s visual perception (e.g., acceleration and deceleration, speed, distance, and changes in vector trajectory).

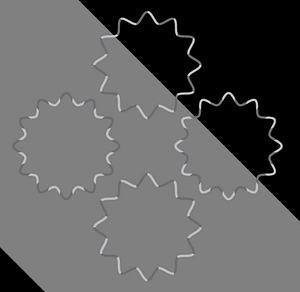

Fall 2018 - Synthetic shape motion

Our visual realities are flooded with complex and robust natural scenes. New findings in curvature estimation illustrate where our expectation of reality can break down under very simple mechanisms.

We present extensions of contrast polarity reversal curve distortion in two dimensions simultaneously:

1. projected onto complex geometries, and

2. modulated in time.

The alternating luminance values are mapped onto shapes that satisfy the “gentle curve” prerequisite while the reversal point is shifted in time. The alternating increasing and decreasing curvature distortions generate perceived shape motion. Removing a single prerequisite (e.g., changing the background luminance from 50% gray to black) deactivates the effect, revealing the static curvature. This opens new possibilities for synthetic shape distortion through temporally modulated contrast opponency, creating perceptually fluid geometries from rigid forms. The work demonstrates a use case for Takahashi’s obtuse corner shape dominance effect and explores fluid perceptual shape motions as a new kind of perceptually kinetic object.
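As a rough illustration of the temporal modulation described above, the sketch below samples a sine curve, assigns alternating light and dark luminance to half-period segments, and drifts the reversal point over time against a 50% gray background. It is not the project's implementation: the function name `polarity_samples`, the luminance values, the drift rate, and the choice of a sine wave as the "gentle curve" are all assumptions made for illustration.

```python
import math

BACKGROUND = 0.5  # 50% gray background, as stated in the text


def polarity_samples(t, n_samples=200, cycles=3, drift_hz=0.5,
                     dark=0.2, light=0.8):
    """Return (x, y, luminance) samples along a sine curve at time t (seconds).

    All parameters are hypothetical. Each polarity segment spans half a
    period of the curve; the segment boundaries (reversal points) slide
    along the curve as t advances.
    """
    # Offset of the reversal points, in half-period units, wrapping every
    # two segments so the pattern repeats after 1 / drift_hz seconds.
    offset = (2.0 * t * drift_hz) % 2.0
    samples = []
    for i in range(n_samples):
        u = i / n_samples * cycles          # position in curve periods
        y = math.sin(2.0 * math.pi * u)     # the assumed "gentle curve"
        segment = math.floor(2.0 * u - offset)
        lum = light if segment % 2 == 0 else dark
        samples.append((u, y, lum))
    return samples


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0):
        first = polarity_samples(t)[0]
        print(f"t={t:.1f}s first sample luminance={first[2]}")
```

With the defaults, the reversal points sit at the curve's zero crossings at t = 0 and at its peaks and troughs at t = 0.5 s. Rendering the samples over `BACKGROUND` (or over black, to test the prerequisite mentioned above) is left to whatever drawing library is at hand.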

Spring 2018 - Atmopragmascope

We are surrounded by displays and technologies whose mechanisms are hidden from view. The atmopragmascope explores how to reveal the underlying mechanisms not only of how we generate and parse visual information but of how it can be delivered. The design process and its outcomes derive, in part, from the mechanics of the eye that guide our estimation of a “fair curve.” Working within the parameters of 19th-century tools and techniques, I adopted the research modalities of a time in which the eye and its low-level visual mechanisms for discerning thresholds, edges, shapes, etc., were the dominant tools of experimentation. Modern design and engineering tools, while enabling increasingly complex and sophisticated research, can also obscure the fundamentals of form and function. By minimizing the influence of the technological aids we give our vision in the act of creation, the articulation of this machine intends to give form to the fundamental algorithms inherent in early biological visual processing.

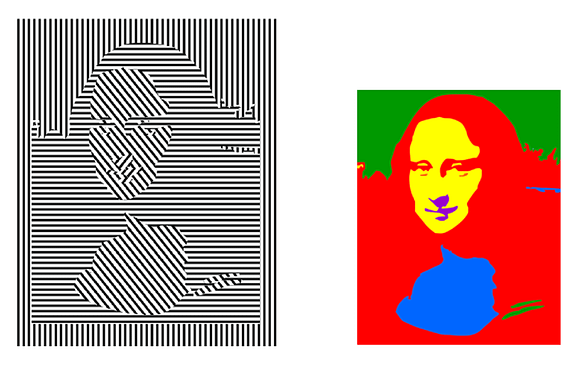

Fall 2016 - Perceptual programming: reconceptualizing peripheral vision for extended environments

Visual experience is a complex framework of perceptual interactions between motion and perception. As displays become larger and more immersive, with the potential to extend beyond our central vision, we exploit several novel frameworks for visual illusion, such as motion-induced position shift and slow-speed bias, that allow us to engage the entirety of our visual field. Peripheral vision is capable of detecting complex motion cues. We are now learning how to computationally generate adaptations that allow you to read – via perceived object motion – from the edge of your vision.

Using sensory plasticity as a medium to create new visual experiences allows us to challenge what we perceive as our extant realities.

The video below targets peripheral vision processes – please view in full screen to observe the strongest effect.

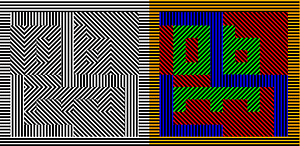

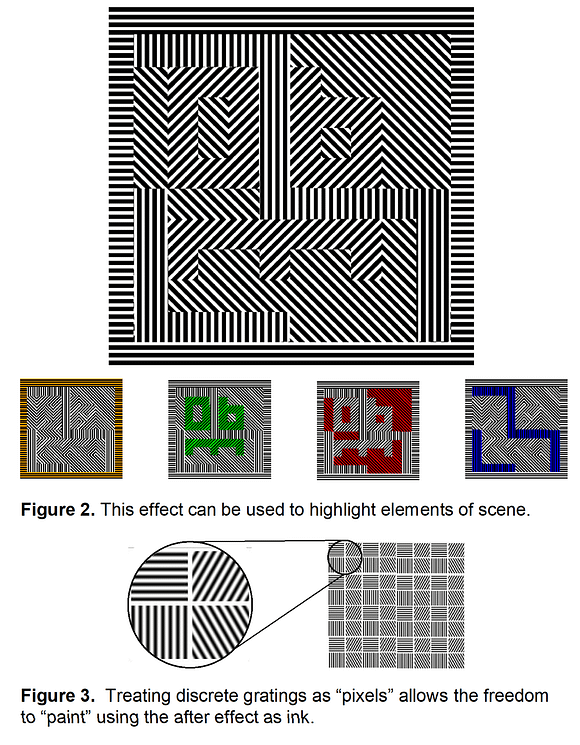

Spring 2016 - Invisible ink

The eye is a highly sensitive and programmable array of sensors. By presenting stimuli to discrete regions of the retina, we encode neural pathways with spatially modulated information to explore the many dimensions of our unique visual experience. Invisible ink builds upon the spatial frequency theory of vision to program the color adaptation of edge-sensitive neurons, allowing us to manipulate our reception of a scene using only our visual system and its aftereffects.

This demonstration is an orientation-contingent color aftereffect. After a period of adaptation to the video stimulus, any corresponding spatial frequencies take on new chromatic information. This phenomenon is one of many exciting potential building blocks that can be integrated into our daily experience.

Fall 2015 - Perceptual Masking

The following sequence demonstrates the interlinkages between visual and auditory sensory processing. The video consists of a repeated, one-second clip of a grid of hues, each flickering at its own randomized, distinct frequency. We can think of these as pixels. Throughout the entire demonstration, the clip repeats each second, remaining unchanged. The only modulation occurs aurally. The shift in auditory chirp frequency allows perceptual alignment of patterns in hue and flicker to emerge.
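The visual half of the demonstration can be sketched in a few lines. The code below is a minimal illustration, not the original stimulus: the grid size, frame rate, square-wave flicker, and the function name `make_flicker_clip` are assumptions. It builds a one-second clip in which each cell has a random hue and a distinct integer flicker frequency; using integer frequencies means every cell returns to its starting state after exactly one second, so the clip loops without a visible seam.

```python
import colorsys
import random


def make_flicker_clip(rows=4, cols=4, fps=60, seed=0):
    """Build one second of frames for a grid of flickering hues.

    Hypothetical parameters: a rows x cols grid rendered at fps frames per
    second. Returns (frames, freqs), where frames[n][r][c] is an 8-bit
    (R, G, B) tuple and freqs holds each cell's flicker frequency in Hz.
    """
    rng = random.Random(seed)
    n_cells = rows * cols
    # Distinct integer frequencies up to fps / 2, the fastest flicker the
    # frame rate can represent. Raises ValueError if the grid has more
    # cells than there are available frequencies.
    freqs = rng.sample(range(1, fps // 2 + 1), n_cells)
    hues = [rng.random() for _ in range(n_cells)]

    frames = []
    for n in range(fps):
        frame = []
        for r in range(rows):
            row = []
            for c in range(cols):
                i = r * cols + c
                # Integer square wave: the cell toggles 2 * freq times
                # per second, starting in the "on" state.
                on = (2 * freqs[i] * n // fps) % 2 == 0
                rgb = colorsys.hsv_to_rgb(hues[i], 1.0, 1.0 if on else 0.0)
                row.append(tuple(round(255 * ch) for ch in rgb))
            frame.append(row)
        frames.append(frame)
    return frames, freqs


if __name__ == "__main__":
    frames, freqs = make_flicker_clip()
    print(len(frames), "frames; frequencies (Hz):", sorted(freqs))
```

Playing `frames` on repeat while varying only an accompanying audio chirp would reproduce the structure described above; the audio side is omitted here.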