Translating motion to meaning

This research expands upon prior work by presenting the following contributions:

- Translation of alphanumeric and abstract symbols into motion-modulated static aperture stimuli which:

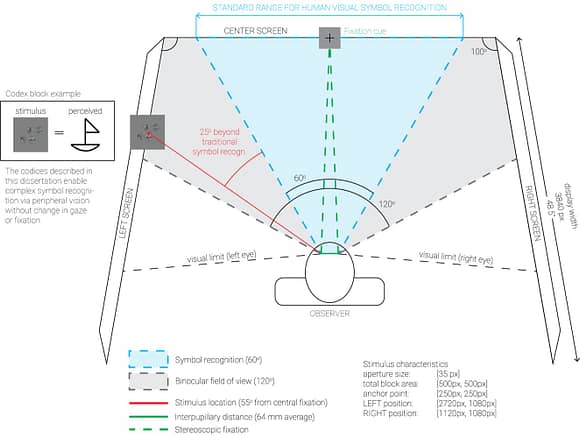

- are detectible with high accuracy rates at eccentricities greater than 50° from fixation

- occupy small regions of the visual field (less than 1 degree)

- are scalable to symbols containing multiple strokes for communicating increasingly complex semantic information

- Adaptation of visual stimuli for deployment in complex natural environments, such as from a pedestrian or automotive cockpit point of view.

The unique advantage of this approach is the compression of highly semantic visual information into small static apertures, overcoming cortical scaling to efficiently communicate characters and symbols to receptive fields at greater than 50° eccentricity.

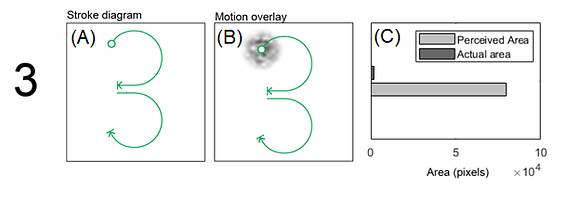

For simplicity, we can think of any static or unchanging object in our periphery as becoming invisible through habituation. Motion is the catalyst for perception in the outer regions of the retina. A simple way to overcome the imperceptibility of static forms in the far periphery is to apply a pattern of Gaussian local motion to a character or symbol, as in Schäffel’s “Luminance Looming” effect. However, presenting symbols at increasing eccentricities in the visual field must account for cortical scaling: beyond 40°, the required enlargement would exceed a factor of 3, which is highly impractical for peripheral information delivery. To overcome cortical scaling, semantic forms are parsed into strokes and conveyed through motion-induced position shifts (MIPS) from small, static apertures. In the viewing environment developed for experimentation, aperture diameters were set at 35 px, or 0.64° of the observer’s visual field. By using multiple MIPS apertures for multi-stroke symbols, this approach can theoretically articulate almost any conceivable character or symbol within a fraction of a degree of the visual field.
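To make the scaling argument concrete, here is a minimal Python sketch. It assumes the common linear approximation of cortical scaling, size(E) = size(0) × (1 + E/E2), which the text does not specify; E2 = 20° is a hypothetical value picked only because it reproduces the factor of 3 at 40° mentioned above. The 35 px and 0.64° aperture figures come from the text; `scaling_factor` and `pixels_per_degree` are illustrative helper names.

```python
# Sketch: linear cortical-scaling enlargement vs. a fixed-size MIPS aperture.
# ASSUMPTION: size(E) = size(0) * (1 + E / E2). E2 = 20 deg is hypothetical,
# chosen so that the factor at 40 deg equals the 3x mentioned in the text.

E2_DEG = 20.0        # hypothetical eccentricity at which required size doubles
APERTURE_PX = 35     # aperture diameter from the text
APERTURE_DEG = 0.64  # angular size of that aperture from the text


def scaling_factor(eccentricity_deg: float, e2_deg: float = E2_DEG) -> float:
    """Enlargement a conventional symbol needs at a given eccentricity."""
    return 1.0 + eccentricity_deg / e2_deg


def pixels_per_degree(size_px: float, size_deg: float) -> float:
    """Display resolution implied by a stimulus of known pixel and angular size."""
    return size_px / size_deg


if __name__ == "__main__":
    ppd = pixels_per_degree(APERTURE_PX, APERTURE_DEG)
    print(f"implied resolution: {ppd:.1f} px/deg")
    for ecc in (0, 20, 40, 50):
        f = scaling_factor(ecc)
        print(f"{ecc:>2} deg: scaled symbol needs x{f:.1f}; "
              f"MIPS aperture stays {APERTURE_DEG} deg")
```

Under this assumption a conventionally scaled symbol keeps growing with eccentricity, while the MIPS aperture stays fixed at 0.64°. The implied display resolution is simply 35 px divided by 0.64°.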

Codex block design

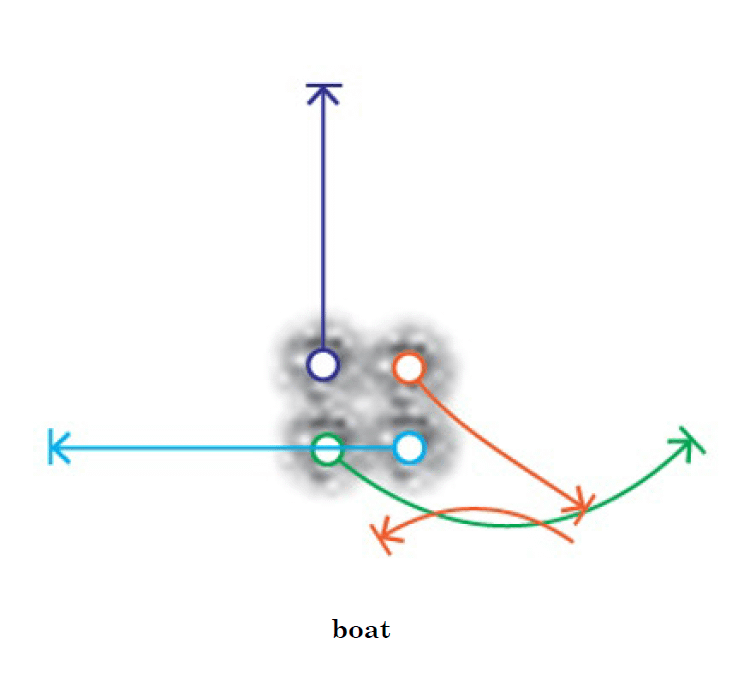

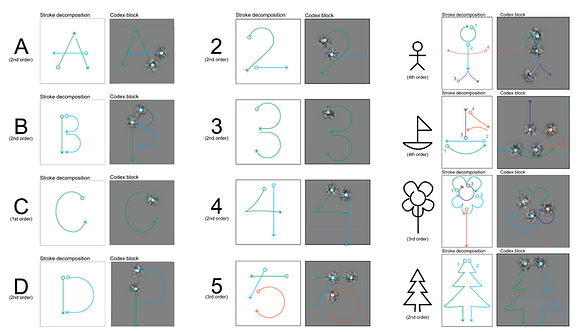

As a demonstration of first principles, I generated twelve unique symbols and transformed them into motion-modulated static aperture codex blocks: the characters A, B, C, D, 2, 3, 4, and 5, plus the abstract *forms* boat, stick figure, flower, and tree. Each symbol was first parsed into its constituent stroke paths. An image of white Gaussian noise was then animated along each stroke path behind an aperture to generate a perceived position shift. For legibility, the strokes in the diagrams here are color-coded by their order in the sequence: 1st (green), 2nd (light blue), 3rd (dark blue), and 4th (orange). Note that sequential strokes whose end-point and start-point overlap are consolidated into one aperture (for example, the second stroke of *B*).
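The stimulus described above, white Gaussian noise drifting along a stroke path behind a fixed aperture, can be sketched in plain Python. This is a hypothetical reconstruction, not the author’s stimulus code: the polyline stroke representation, the drift speed, the wrap-around sampling of the noise texture, and all function names are assumptions; only the 35 px aperture diameter comes from the text.

```python
import math
import random

APERTURE_DIAMETER_PX = 35           # from the text
RADIUS = APERTURE_DIAMETER_PX // 2  # 17 px, so the window is 35 px across


def gaussian_noise(width, height, seed=0):
    """White Gaussian noise texture (zero mean, unit variance)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(height)]


def path_offset(path, distance):
    """Displacement from path[0] after travelling `distance` px along a polyline."""
    x0, y0 = path[0]
    remaining = distance
    for (ax, ay), (bx, by) in zip(path, path[1:]):
        seg = math.hypot(bx - ax, by - ay)
        if seg > 0 and remaining <= seg:
            t = remaining / seg
            return ax + t * (bx - ax) - x0, ay + t * (by - ay) - y0
        remaining -= seg
    return path[-1][0] - x0, path[-1][1] - y0  # clamp at the end of the stroke


def aperture_frame(noise, offset, radius=RADIUS):
    """Noise seen through a fixed circular aperture, texture shifted by `offset`.

    The aperture never moves; only the texture behind it does. Pixels outside
    the circle are None (background)."""
    h, w = len(noise), len(noise[0])
    dx, dy = (int(round(v)) for v in offset)
    frame = []
    for y in range(-radius, radius + 1):
        row = []
        for x in range(-radius, radius + 1):
            if x * x + y * y <= radius * radius:
                # Sampling at (x - dx, y - dy) moves the content by +(dx, dy).
                row.append(noise[(y - dy) % h][(x - dx) % w])
            else:
                row.append(None)
        frame.append(row)
    return frame


def animate_stroke(noise, path, speed_px_per_frame, n_frames):
    """Frames in which the noise drifts along `path` behind a static aperture."""
    return [aperture_frame(noise, path_offset(path, speed_px_per_frame * t))
            for t in range(n_frames)]


noise = gaussian_noise(128, 128, seed=1)
# Hypothetical vertical down-stroke (y grows downward), drifting 2 px per frame.
frames = animate_stroke(noise, [(0, 0), (0, 40)], speed_px_per_frame=2, n_frames=20)
print(len(frames), "frames of", len(frames[0]), "x", len(frames[0][0]), "px")
```

Keeping the aperture fixed while only the texture moves is what separates this from simply translating a patch across the screen: the physical footprint never exceeds the 35 px window.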

In the case of the symbol *boat*, a need immediately arises to express multi-stroke vector trajectories in order to communicate a complex symbol within a momentary arc of perception. To accomplish this, I generated arrays of MIPS apertures, with the number of elements corresponding to the number of strokes needed to impart the minimum information required for symbol recognition.
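One way to derive the number of array elements from a symbol’s strokes, including the consolidation rule for strokes whose end-point meets the next start-point, is sketched below. The stroke coordinates for *B* are hypothetical placeholders, and the `consolidate_strokes` helper and its tolerance are assumptions rather than the author’s procedure.

```python
import math


def consolidate_strokes(strokes, tol=1.0):
    """Merge consecutive strokes whose end-point meets the next start-point.

    Each stroke is a list of (x, y) points in drawing order. Returns one
    polyline per aperture, so len(result) is the number of array elements."""
    groups = []
    for stroke in strokes:
        if groups and math.dist(groups[-1][-1], stroke[0]) <= tol:
            groups[-1] = groups[-1] + stroke[1:]  # continue the same path
        else:
            groups.append(list(stroke))
    return groups


# Hypothetical strokes for "B": a vertical spine, then the upper and lower
# bowls, which share the point (0, 20) and therefore merge into one aperture.
B = [
    [(0, 0), (0, 40)],
    [(0, 0), (15, 5), (0, 20)],
    [(0, 20), (18, 30), (0, 40)],
]
apertures = consolidate_strokes(B)
print(len(apertures), "apertures")
```

Under these assumed coordinates, three drawn strokes collapse to two apertures, matching the text’s note that the second stroke of *B* is consolidated.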

What is important to note here is the displacement of the additive perceived shifts relative to the actual static structure. The most valuable asset of this approach is the close onset, or compression, of physical loci within a confined visual environment: in other words, the space within an x-y coordinate plane or the pixel pitch of digital display technologies. In the constructed study environment, each aperture spanned 0.64° of the observer’s visual field, approximately the size of your pinky fingernail held at arm’s length.
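The 0.64° figure and the fingernail comparison both follow from the standard visual-angle relation θ = 2·arctan(s / 2d) for an object of size s at distance d. The sketch below applies it; the 0.65 cm fingernail width and 58 cm arm’s length are hypothetical round numbers, not measurements from the study.

```python
import math


def visual_angle_deg(size, distance):
    """Visual angle (degrees) subtended by an object of `size` at `distance` (same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance)))


def size_for_angle(angle_deg, distance):
    """Object size that subtends `angle_deg` at `distance` (inverse of visual_angle_deg)."""
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)


# Hypothetical values: a ~0.65 cm pinky fingernail viewed at ~58 cm (arm's length).
FINGERNAIL_CM = 0.65
ARM_LENGTH_CM = 58.0

angle = visual_angle_deg(FINGERNAIL_CM, ARM_LENGTH_CM)
print(f"fingernail at arm's length: {angle:.2f} deg")
print(f"a 0.64 deg aperture at {ARM_LENGTH_CM:.0f} cm spans "
      f"{size_for_angle(0.64, ARM_LENGTH_CM):.2f} cm")
```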

Apertures were activated consecutively in time and, when inactive, presented static grain. The arrays were arranged spatially so that each aperture was positioned relative to the previous stroke. This compact arrangement becomes especially valuable for future applications on the limited display real estate of head-mounted consumer technologies.
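The temporal sequencing, with one aperture animating at a time while the others show frozen grain, can be sketched as a simple frame schedule. The per-stroke frame counts and the optional blank gap between strokes are hypothetical parameters; the text states only that apertures were activated consecutively and presented static grain when inactive.

```python
def activation_schedule(frames_per_stroke, gap_frames=0):
    """Index of the active aperture on each frame (None during optional gaps).

    Apertures play one after another in stroke order."""
    schedule = []
    for i, n in enumerate(frames_per_stroke):
        schedule.extend([i] * n)
        if i < len(frames_per_stroke) - 1:
            schedule.extend([None] * gap_frames)  # hypothetical pause between strokes
    return schedule


def aperture_states(schedule, n_apertures):
    """Per frame: 'moving' for the active aperture, 'static' (frozen grain) for the rest."""
    return [["moving" if a == active else "static" for a in range(n_apertures)]
            for active in schedule]


# Hypothetical timing for a two-aperture symbol such as B: 3 frames, then 4 frames.
schedule = activation_schedule([3, 4], gap_frames=1)
for frame, states in enumerate(aperture_states(schedule, 2)):
    print(frame, states)
```

A "static" aperture would simply keep displaying one frozen noise frame, so every aperture stays visible as grain and only the active one carries motion.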

Excerpts: stimulus preparation

Adapting stimuli to dynamic environments

One of the greatest challenges of adapting motion-modulated semantics to real-world environments is maintaining requisite conditions for effects to occur.