Information floods the center of our visual field and often saturates the focus of our attention, yet there are parallel channels in the visual system constantly and unconsciously processing our environment. There is a dormant potential to activate these channels to challenge the limits of perception.

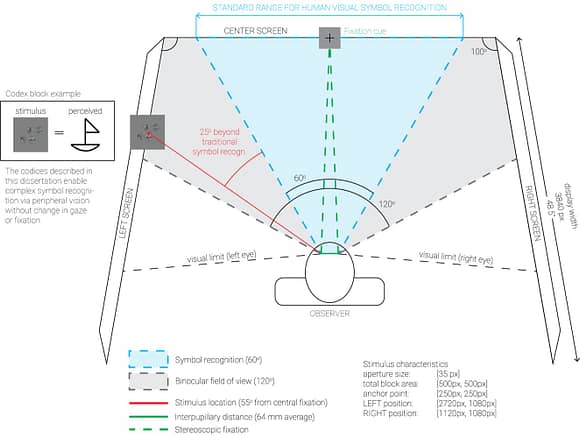

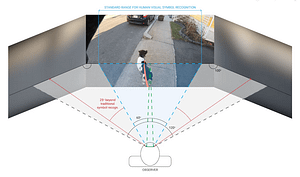

This demonstration argues for the development and application of real-time, adaptive, perceptual skins, projected onto the observer’s immediate environment and computationally generated to target and stimulate key visual processing pathways. By activating biological processes responsible for edge detection, curve estimation, and image motion, I propose that these perceptual skins can change the observer’s proprioception as they move through dynamic environments.


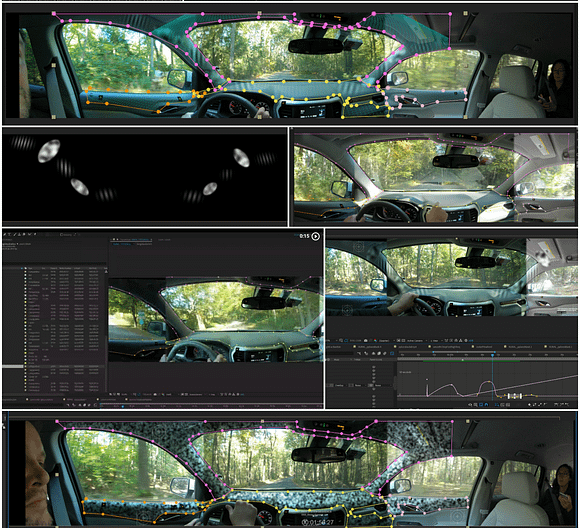

Stimulus projections to affect observer perception of self-motion through space time [live field test, central channel, raw]. Sequence 1: computationally generating sunlight on a rainy day. Sequence 2: gimbal correction for observer proprioception.

Data capture:

Evaluating adaptive cues in dynamic scenes requires a carefully controlled environment that is both parameterized and repeatable. The study environment includes three use cases: walking, driving in a rural environment, and driving in a suburban environment. Source videos for the driving sequences were captured on three 4K wide-angle cameras.

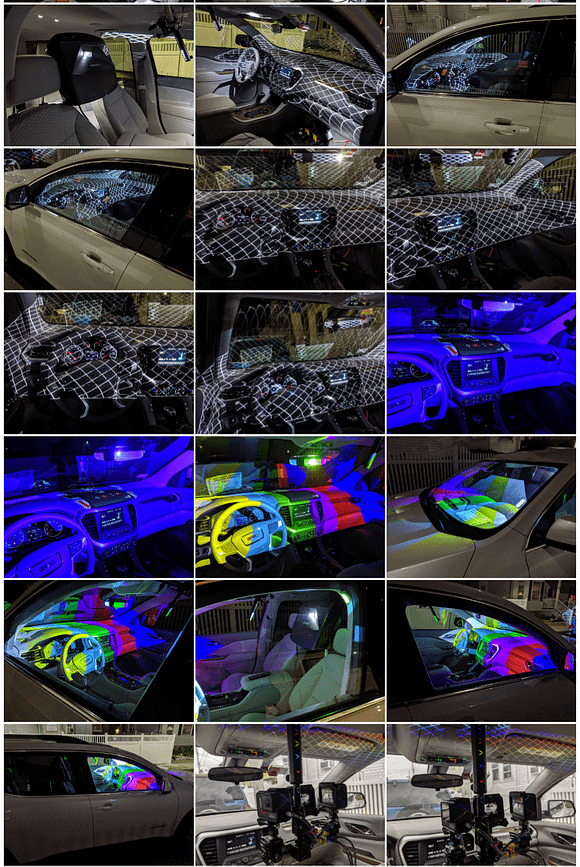

Environmental capture and stimulus projection (below): (a) sensor array, (b) projection system, (c) interior topical mapping

Raw footage from natural environments was captured with a custom 12-DOF rig consisting of three GoPro HERO7 cameras, each recording 4K video at 60 FPS with a 69.5° vertical and 118.2° horizontal FOV, encoded with the H.265 (HEVC) codec.

Processing:

Even with on-board image stabilization, the raw videos contain significant jitter. Before capturing the videos, we placed registration marks at several locations inside the vehicle. Afterwards, we imported the raw videos into After Effects, tracked the motion of the marks, and used the tracks to stabilize the footage. After stabilization, the three views were aligned using a corner pin effect, and the results were rendered and encoded with the H.265 (HEVC) codec.
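The stabilization itself was done in After Effects, but the underlying idea of mark-based stabilization can be sketched in a few lines. The example below is an illustration only, not the pipeline used here: it assumes the registration marks have already been tracked to 2D pixel coordinates, models the jitter as a rigid motion (rotation plus translation), and uses hypothetical function names (`estimate_rigid_motion`, `stabilize_point`).

```python
import math

def estimate_rigid_motion(ref_marks, cur_marks):
    """Least-squares rotation + translation that maps ref_marks onto cur_marks.

    Both arguments are equal-length lists of (x, y) mark positions.
    Returns (angle_radians, tx, ty) such that cur ~= R(angle) * ref + t.
    """
    n = len(ref_marks)
    rcx = sum(x for x, _ in ref_marks) / n
    rcy = sum(y for _, y in ref_marks) / n
    ccx = sum(x for x, _ in cur_marks) / n
    ccy = sum(y for _, y in cur_marks) / n

    # Accumulate dot and cross products of the centered mark positions;
    # their ratio gives the best-fit rotation angle in closed form.
    dot = cross = 0.0
    for (rx, ry), (cx, cy) in zip(ref_marks, cur_marks):
        ax, ay = rx - rcx, ry - rcy
        bx, by = cx - ccx, cy - ccy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    angle = math.atan2(cross, dot)

    cos_a, sin_a = math.cos(angle), math.sin(angle)
    tx = ccx - (cos_a * rcx - sin_a * rcy)
    ty = ccy - (sin_a * rcx + cos_a * rcy)
    return angle, tx, ty

def stabilize_point(point, motion):
    """Undo the estimated motion: map a current-frame point to reference coordinates."""
    angle, tx, ty = motion
    x, y = point[0] - tx, point[1] - ty
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Apply the inverse (transposed) rotation.
    return (cos_a * x + sin_a * y, -sin_a * x + cos_a * y)

# Hypothetical mark positions in a reference frame (pixels).
reference = [(100.0, 200.0), (1800.0, 220.0), (950.0, 1000.0), (400.0, 1500.0)]

# Simulate a jittered frame: 2 degrees of roll and a (5, -3) pixel shift.
true_angle = math.radians(2.0)
jittered = [
    (math.cos(true_angle) * x - math.sin(true_angle) * y + 5.0,
     math.sin(true_angle) * x + math.cos(true_angle) * y - 3.0)
    for x, y in reference
]

motion = estimate_rigid_motion(reference, jittered)
restored = [stabilize_point(p, motion) for p in jittered]
```

In a full implementation the inverse motion would be applied to every pixel of the frame rather than to the marks alone, and a corner pin corresponds to a more general perspective warp than the rigid model assumed here.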

Once the footage is stabilized, the interior of the cockpit is isolated and used to mask the prepared stimuli.
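As a rough illustration of the masking step, the sketch below blends a stimulus layer into the footage using a per-pixel mask. It makes several assumptions that the description above does not confirm: frames are grayscale 2D lists of floats in [0, 1], a mask value of 1 means the stimulus is fully visible at that pixel, and the function name `composite_masked_stimulus` is hypothetical. If the mask polarity is the other way around, swap `m` and `1.0 - m`.

```python
def composite_masked_stimulus(footage, stimulus, mask):
    """Blend stimulus over footage, weighted per pixel by mask.

    All three arguments are equally sized 2D lists of floats.
    mask = 1 shows only the stimulus, mask = 0 shows only the footage,
    and intermediate values give a soft (feathered) edge.
    """
    return [
        [m * s + (1.0 - m) * f for f, s, m in zip(f_row, s_row, m_row)]
        for f_row, s_row, m_row in zip(footage, stimulus, mask)
    ]

# Tiny 2x2 example with made-up pixel values.
footage = [[0.2, 0.2], [0.2, 0.2]]
stimulus = [[1.0, 1.0], [1.0, 1.0]]
mask = [[1.0, 0.0], [0.5, 0.0]]

frame = composite_masked_stimulus(footage, stimulus, mask)
```

Because the footage is stabilized first, a single mask drawn on one frame can in principle be reused across the whole sequence.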

Viewing environment architecture:

High-resolution LCD displays surrounded the observer: the center display was parallel to the observer’s coronal plane, and the left and right displays abutted it, forming a 100° angle at each seam. The observer was seated 31.5 inches from the center screen, so that each pixel subtended 1.10 arcmin of the visual field. No restraining mechanisms were used to fix head or body motion. The three displays were mosaiced using an NVIDIA Quadro RTX 4000 graphics card (driver version 431.02).
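The relationship between viewing distance and per-pixel visual angle can be checked with a short calculation. The sketch below takes the two figures stated above (31.5 inches, 1.10 arcmin) and derives the implied physical pixel pitch; the function names are illustrative, and the calculation assumes a pixel at the center of the screen viewed head-on.

```python
import math

def visual_angle_arcmin(size, distance):
    """Visual angle (arcminutes) subtended by an object of `size` at `distance`."""
    return math.degrees(2.0 * math.atan(size / (2.0 * distance))) * 60.0

def size_from_visual_angle(arcmin, distance):
    """Physical size that subtends `arcmin` arcminutes at `distance`."""
    theta = math.radians(arcmin / 60.0)
    return 2.0 * distance * math.tan(theta / 2.0)

VIEWING_DISTANCE_IN = 31.5   # from the setup description
PIXEL_ANGLE_ARCMIN = 1.10    # from the setup description

pitch_in = size_from_visual_angle(PIXEL_ANGLE_ARCMIN, VIEWING_DISTANCE_IN)
pitch_mm = pitch_in * 25.4
pixels_per_inch = 1.0 / pitch_in

print(f"pixel pitch: {pitch_mm:.3f} mm ({pixels_per_inch:.1f} PPI)")
```

Under these assumptions the implied pixel pitch is roughly 0.256 mm, or about 99 pixels per inch. Because the observer's head was unrestrained, the actual per-pixel angle would vary slightly with posture.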
