Adapting stimuli to dynamic environments

One of the greatest challenges of adapting motion-modulated semantics to real-world environments is maintaining the conditions required for the effects to occur. Equiluminous kinetic edges are essential for inducing perceived position shifts. The effect tolerates some difference between the luminance of the white Gaussian noise (WGN) patch and that of the environment, but its magnitude falls off once the imbalance between them becomes large enough.

To preserve the perceived position-shift effects, white Gaussian noise is first rendered and prepared to be animated behind an aperture along a stroke path defined by the symbol vector geometries. The second stage of stimulus preparation is a process of adapting peripheral stimuli to parameters from dynamic natural scenes. The adapted stimulus is then overlaid onto the environment and presented to the observer.
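The first stage can be sketched in a few lines of NumPy. This is a minimal illustration rather than the rendering code used in the studies: the patch size, the window width, the seed, and the choice to blur before windowing are all assumptions made for the example, and the function names are hypothetical. Animation along the stroke path is not shown.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable 2-D Gaussian blur: rows first, then columns (zero-padded edges)."""
    k = gaussian_kernel_1d(sigma)
    rows = np.apply_along_axis(np.convolve, 1, img, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="same")

def noise_patch(size: int = 128, blur_sigma: float = 5.0,
                window_sigma: float = 20.0, seed: int = 0) -> np.ndarray:
    """Blurred white Gaussian noise behind a Gaussian aperture, on mid-grey.

    size, window_sigma and seed are illustrative assumptions; blur_sigma is in pixels.
    """
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal((size, size))      # white Gaussian noise
    carrier = blur(noise, blur_sigma)              # smooth the carrier
    carrier /= np.abs(carrier).max()               # scale to [-1, 1]
    y, x = np.mgrid[:size, :size] - (size - 1) / 2
    window = np.exp(-(x ** 2 + y ** 2) / (2 * window_sigma ** 2))
    return 0.5 + 0.5 * carrier * window            # values stay within [0, 1]

patch = noise_patch()
```

The patch fades to mid-grey (0.5) at its edges, so when its mean luminance matches the surround the only visible structure is the noise texture inside the aperture.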

Methodologies: chromatic adaptation

In the studies conducted for this research, chromatic adaptation was the primary focus when preparing codex blocks for dynamic visual environments.

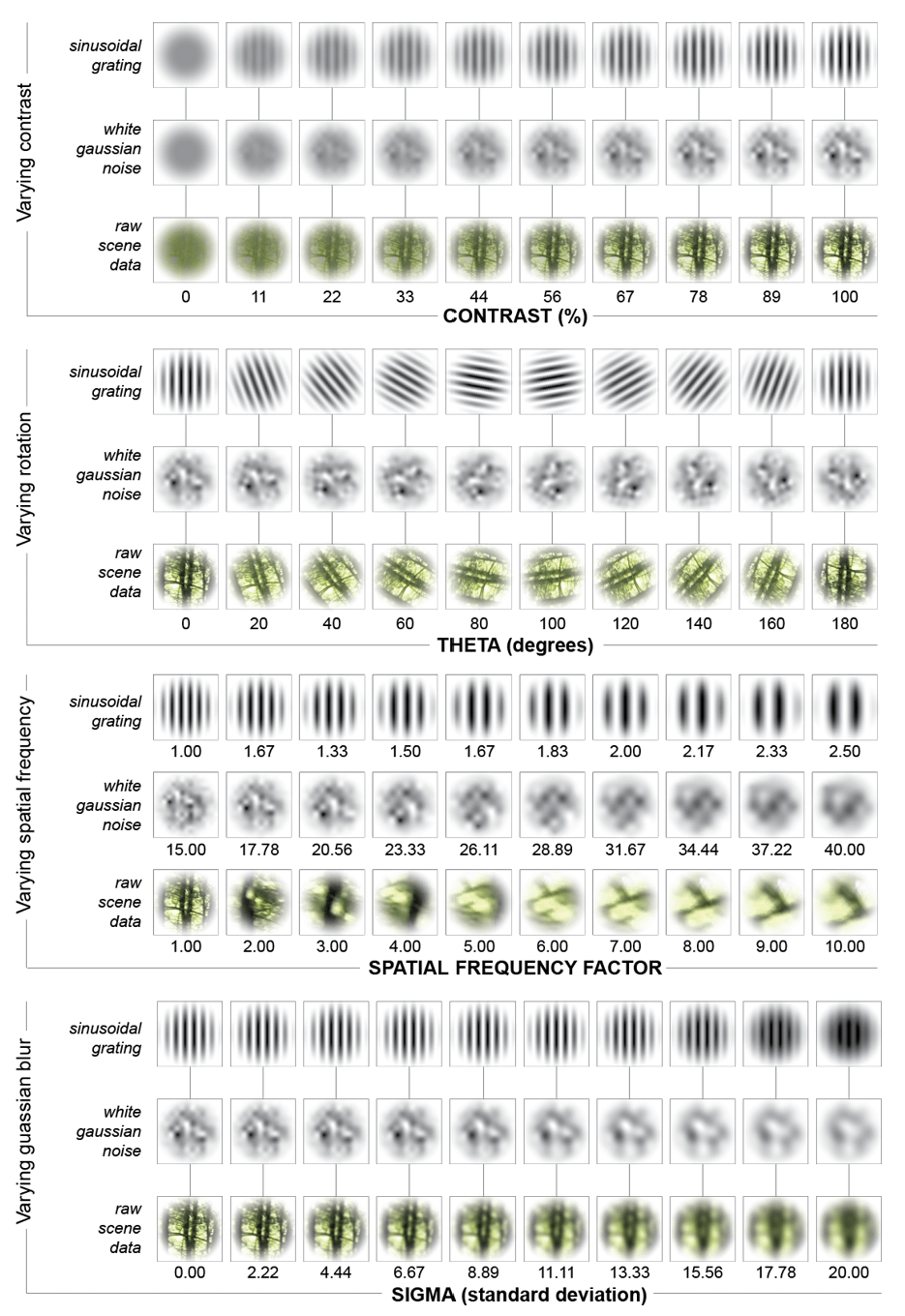

The source psychophysical structures are second-order Gabor patches: windowed white Gaussian noise blurred with a 2-D Gaussian smoothing kernel, σ = 5. Two potential methods of adaptation are highlighted here: overlay blending and 1:1 hue, saturation, value (HSV) adaptation. The former is a variation of element-wise multiplication of two source images, in which the top layer is linearly interpolated across the span of values in the base layer.
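A minimal sketch of overlay blending, assuming the standard overlay definition used by common image editors and compositing specifications; the exact variant used in the studies is not shown here, so treat the formula as an assumption. Both layers are taken to be floating-point arrays scaled to [0, 1], with the environment as the base layer and the noise patch as the top layer.

```python
import numpy as np

def overlay_blend(base, top):
    """Standard overlay blend of two images with values in [0, 1].

    Dark base pixels (< 0.5) are multiplied with the top layer,
    light base pixels are screened with it.
    """
    base = np.asarray(base, dtype=float)
    top = np.asarray(top, dtype=float)
    dark = 2.0 * base * top                          # multiply branch
    light = 1.0 - 2.0 * (1.0 - base) * (1.0 - top)   # screen branch
    return np.where(base < 0.5, dark, light)

# A mid-grey top layer (0.5) leaves the base unchanged.
print(overlay_blend([0.25, 0.75], [0.5, 0.5]))
```

For a fixed base value the result is linear in the top layer: a dark base maps the top layer onto the interval [0, 2·base], and a light base maps it onto [2·base − 1, 1]. A noise patch centred on mid-grey therefore modulates the scene around its existing values.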

Alternatively, HSV adaptation transforms the RGB matrix of the source patch to HSV space and sets its hue and saturation channels to the mean values measured in the environmental footage, leaving the value channel unchanged.
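HSV adaptation can be sketched with Python's standard `colorsys` module. This is an illustrative example under stated assumptions, not the code used in the studies: pixels are plain (r, g, b) tuples in [0, 1], the function names are hypothetical, and the hue average is computed as a saturation-weighted circular mean because hue wraps around at 1.0. The source text only says "mean values".

```python
import colorsys
import math

def mean_hue_saturation(pixels):
    """Saturation-weighted circular mean hue and mean saturation of RGB pixels."""
    sin_sum = cos_sum = sat_sum = 0.0
    for r, g, b in pixels:
        h, s, _ = colorsys.rgb_to_hsv(r, g, b)
        # Weight by saturation so grey pixels (undefined hue) do not bias the mean.
        sin_sum += s * math.sin(2 * math.pi * h)
        cos_sum += s * math.cos(2 * math.pi * h)
        sat_sum += s
    hue = (math.atan2(sin_sum, cos_sum) / (2 * math.pi)) % 1.0
    return hue, sat_sum / len(pixels)

def adapt_patch(patch_pixels, scene_pixels):
    """Give every patch pixel the scene's mean hue and saturation, keeping its value."""
    hue, sat = mean_hue_saturation(scene_pixels)
    adapted = []
    for r, g, b in patch_pixels:
        _, _, v = colorsys.rgb_to_hsv(r, g, b)
        adapted.append(colorsys.hsv_to_rgb(hue, sat, v))
    return adapted

# Grey noise pixels adapted to a uniformly green region of interest.
scene = [(0.0, 1.0, 0.0)] * 4
adapted = adapt_patch([(0.2, 0.2, 0.2), (0.8, 0.8, 0.8)], scene)
```

Because only hue and saturation are replaced, the value channel of the noise carrier is preserved.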

Methodologies: optical flow

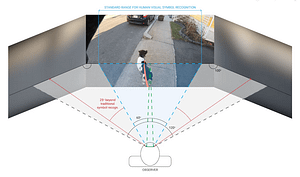

Integrating peripheral stimuli into real-world environments has many other exciting implications. Vection, the perception of self-motion from visual stimuli alone, is heavily influenced by peripheral cues and creates strong illusory effects for the observer. As a tangible example, discordance between visual and vestibular sensory input can result in kinetosis, or motion sickness. There is an opportunity to deploy peripheral stimuli to alter vection dynamically in real-world scenarios, not only to mitigate motion sickness but also to augment the perceived driving experience. In either case, whether for information delivery or for altering observer proprioception, an essential component of this systems approach is the adaptation of peripheral stimuli, which enables these methodologies to be applied outside of controlled lab environments.

The generalized process for stimulus adaptation is as follows:

- Regions of interest (ROIs) are identified within the footage.

- Scene parameters of hue, saturation, value, spatial frequency, and optical flow are calculated within the ROIs.

- Codex block locations are identified within the observer's field of view in the simulated environment.

- Apertures are generated (either traditional Gaussian windows or scene-adaptive, non-uniform envelopes, measured in arcminutes).

- Raw psychophysical carrier signals are generated.

- Stroke paths for stimulus motion are generated.

- Adapted codex blocks are generated from scene data and motion parameters and then integrated at defined carrier signal locations within the simulated environment.
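Because apertures are specified in arcminutes, generating them for a particular display requires converting visual angle to pixels. The sketch below uses the standard visual-angle relation, extent = 2·d·tan(θ/2). The viewing distance and pixel pitch are hypothetical example values, and the relation assumes a flat screen with the extent centred on the line of sight, so it is only an approximation for far-peripheral locations.

```python
import math

def arcmin_to_pixels(arcmin: float, viewing_distance_mm: float,
                     pixel_pitch_mm: float) -> float:
    """Convert a visual angle in arcminutes to an on-screen extent in pixels."""
    theta = math.radians(arcmin / 60.0)                        # arcmin -> radians
    extent_mm = 2.0 * viewing_distance_mm * math.tan(theta / 2.0)
    return extent_mm / pixel_pitch_mm

# Example with assumed values: 60 arcmin (1 degree) viewed from 573 mm
# on a display with 0.25 mm pixel pitch spans roughly 40 pixels.
aperture_px = arcmin_to_pixels(60.0, viewing_distance_mm=573.0, pixel_pitch_mm=0.25)
```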

Possible implementations of these methodologies would use real-time video processing to capture and parse environmental parameters. In the case of augmented driving experiences, the increasingly sophisticated onboard processors developed for self-driving functions can be leveraged for this purpose. For future study design and experimentation, post-processing is a practical way to generate complete video sequences for presentation in a simulated environment.
