Dissertation: Biologically encoded augmented reality

This is a stick figure. The world we perceive around us is an incomplete image, of not

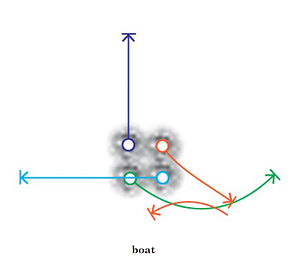

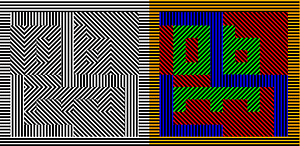

This research demonstrates a foundational approach to peripheral semantic information delivery capable of conveying highly complex symbols well beyond the established mean, using motion-modulated stimuli within a series of small, static apertures in the far periphery (> 50°).

How detectable are far peripheral semantic cues in increasingly dynamic environments? Results show rapid adaptation and high detection accuracy.

Metrics of symbol recognition speed and accuracy in a controlled environment, with codex parameterization using motion energy analysis

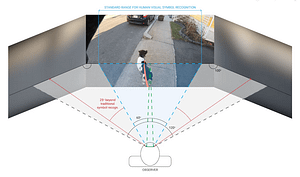

Adapting stimuli to dynamic environments

One of the greatest challenges of adapting motion-modulated semantics to real-world environments

Information floods the center of our visual field and often saturates the focus of our attention, yet

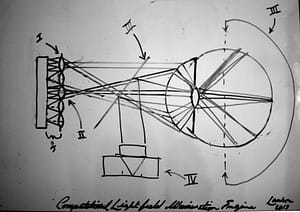

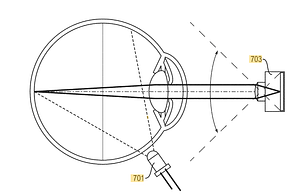

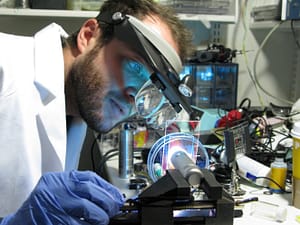

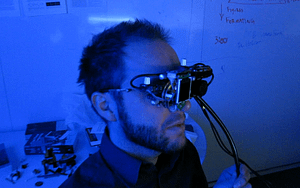

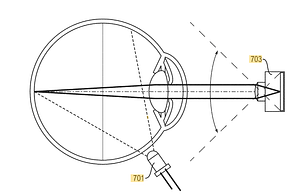

This system introduces ophthalmic light field capture on a micro-camera platform, allowing a robust approach to multi-view image acquisition and ensuring that nearly all light exiting the pupil is gathered for processing.

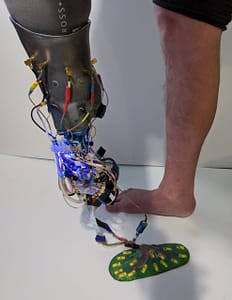

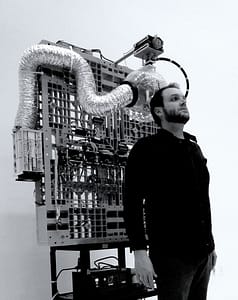

The development of bionic and cybernetic systems illuminates new potentials for the future of non-invasive, networked, neuro-muscular

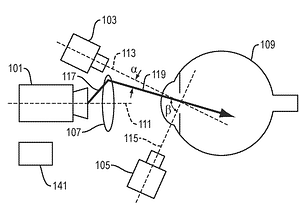

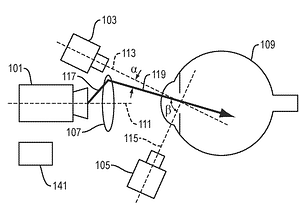

ABSTRACT: A projector and one or more optical components project a light pattern that scans at least

Our visual realities are flooded with complex and robust natural scenes. New findings in curvature estimation illustrate

We are surrounded by displays and technologies whose mechanisms are hidden from view. This work was an

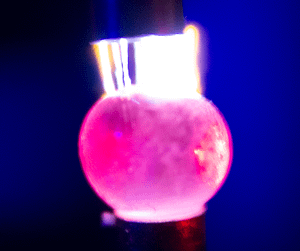

Spherical tissue deformation in electrostatic fields (NaCl + H2O suspension in hydrogel structure)

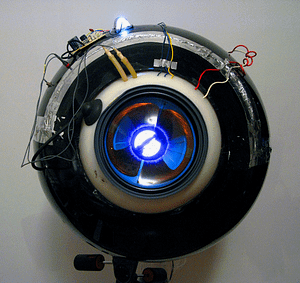

Neurovascular imaging interface, implementing coincident imaging/illumination path and through-pupil illumination.

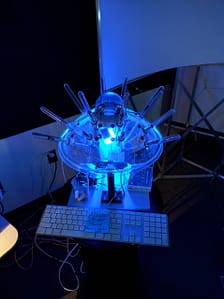

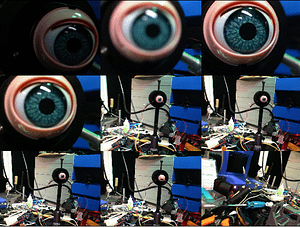

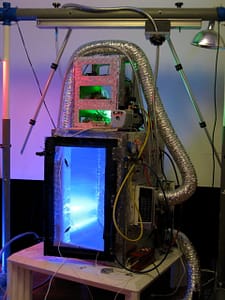

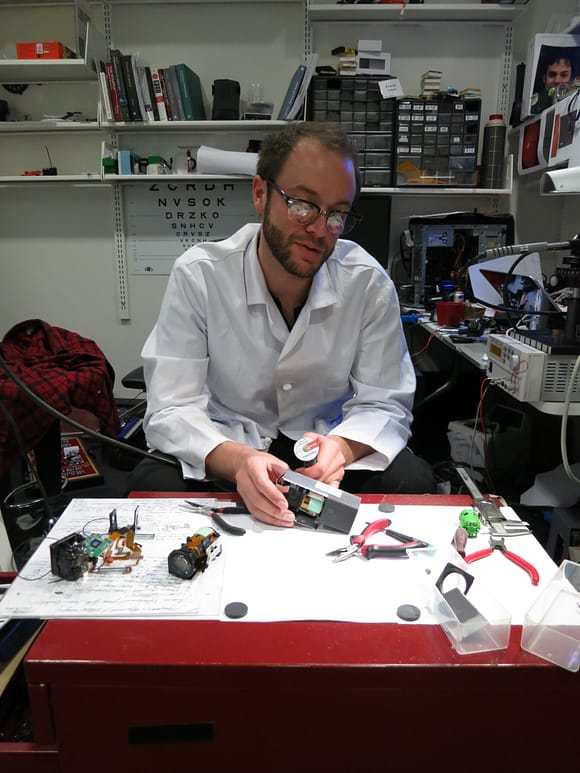

Biomimetic systems architecture engineering: prototypes

Quantizing visual perception as an expression of experience is referentially viewed as

This self-directed, interactive device captures and visualizes images of the retina without the need for complex optics and precise alignment of illumination pathways.

The eye is a highly sensitive and programmable array of sensors. By presenting stimuli to discrete regions

The following sequence presents a demonstration of the interlinkages of visual and auditory sensory processing. The video

Indirect, diffuse illumination may be employed, rather than direct illumination of the retina through the pupil. For

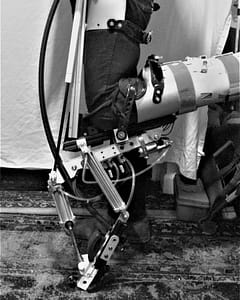

Angle, inertia, and velocity are a subset of moment arms that when instinctively activated are biologically hardcoded

This research investigates the viability of subsurface imaging of the teeth and gums as a possible metric

Publications pending

Proprioceptive feedback systems for kinetosis (motion sickness) treatment and prevention

Rather than treating the environment, the automobile

Non-mydriatic fundus imaging on smartphone platforms may prove to be a paradigm shift for rapid screening devices that require no operational expertise.

This system expands upon prior work by fully integrating the self-alignment architecture within the portable user interface.

I confront the filters of cognition as an intervention into the control I assume to have over

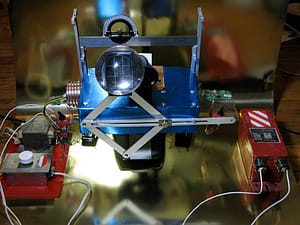

A cataract-affected eye scatters and refracts light before it reaches the retina, caused by a fogging or

Power lift prototype: compressor force-resistive foot feedback with on-board multi-channel solenoid valve configuration [lift capacity = 600

The pain I had been experiencing for months following my reconstructive ankle surgery was not identifiable by

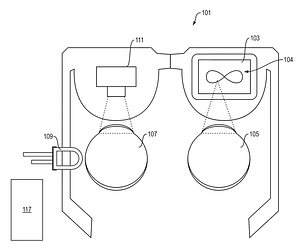

A low-cost, wearable solid-state device with no moving optical parts is articulated to create a full, 3D reconstruction of the anterior segment of the eye.

Building animatronic substrates for calibration and testing of bionic interfaces.

This invention comprises an apparatus for retinal self-imaging. Visual stimuli help the user self-align their eye with a camera. Bi-ocular coupling induces the test eye to rotate into different positions.

Model or manifest, the eye plays catalyst to the senses. For me, a gateway, separating the external

The advancement of exploratory mediums to form new modes of representation functions as one of the many

I engineered and built a 12 cubic foot vacuum particle accelerator with a power grid running at 300,000 V made from discarded television components, and a 10,000-watt stepped-up magnetron series. It is a photographic platform with which I have the freedom and flexibility to address a variety of natural mediums at the molecular level.

Through the use of microwave and radio transmissions, manipulated by musical compositions, I compose living organic matter orchestrated through the manipulation of frequency.

There is an inextricable link between the perspective of human engagement and the reaction of unseen forces.

The Art of Tone is a visual approach to the granular synthesis of sight and the very nature of particles specific to our perspective in space and time

I created a number of artificial retinas on which images can be focused to address iconic decay as an unperceived aspect of sight. These carefully arranged elements have been developed into a device which can only “see” afterimages, presenting an aesthetic world of imagery beyond our conscious view.

These pieces are single exposures made possible by the first digital camera I designed and built, which is nearly three feet across, makes an absolutely horrible noise, and has enough copper in it to make about 2000 pennies.

Thinking of the human visual system as a complex matrix of time-limited interactions, which are constantly decoding

Miner’s pick mattock, a differential joint from a piece of old farm equipment, a mining rail, and

Lawson, Matthew Everett. Biologically encoded augmented reality: multiplexing perceptual bandwidths. Diss. Massachusetts Institute of Technology, 2020.

Lawson, Matthew Everett, and Ramesh Raskar. “Methods and apparatus for retinal imaging.” U.S. Patent No. 9,295,388. 29 Mar. 2016.

In exemplary implementations, this invention comprises apparatus for retinal self-imaging. Visual stimuli help the user self-align his eye with a camera. Bi-ocular coupling induces the test eye to rotate into different positions. As the test eye rotates, a video is captured of different areas of the retina. Computational photography methods process this video into a mosaiced image of a large area of the retina. An LED is pressed against the skin near the eye, to provide indirect, diffuse illumination of the retina. The camera has a wide field of view, and can image part of the retina even when the eye is off-axis (when the eye’s pupillary axis and camera’s optical axis are not aligned). Alternately, the retina is illuminated directly through the pupil, and different parts of a large lens are used to image different parts of the retina. Alternately, a plenoptic camera is used for retinal imaging.

Lawson, Matthew Everett, et al. “Methods and apparatus for retinal imaging.” U.S. Patent No. 9,060,718. 23 Jun. 2015.

In exemplary implementations, this invention comprises apparatus for retinal self-imaging. Visual stimuli help the user self-align his eye with a camera. Bi-ocular coupling induces the test eye to rotate into different positions. As the test eye rotates, a video is captured of different areas of the retina. Computational photography methods process this video into a mosaiced image of a large area of the retina. An LED is pressed against the skin near the eye, to provide indirect, diffuse illumination of the retina. The camera has a wide field of view, and can image part of the retina even when the eye is off-axis (when the eye’s pupillary axis and camera’s optical axis are not aligned). Alternately, the retina is illuminated directly through the pupil, and different parts of a large lens are used to image different parts of the retina. Alternately, a plenoptic camera is used for retinal imaging.

Lawson, Everett, Jason Boggess, Siddharth Khullar, Alex Olwal, Gordon Wetzstein, and Ramesh Raskar. “Computational retinal imaging via binocular coupling and indirect illumination.” In ACM SIGGRAPH 2012 Talks, pp. 1-1. 2012.

The retina is a complex light-sensitive tissue that is an essential part of the human visual system. It is unique, as it can be optically observed with non-invasive methods through the eye’s transparent elements. This has inspired a long history of retinal imaging devices for examination of optical function [Van Trigt 1852; Yates 2011] and for diagnosis of many of the diseases that manifest in the retinal tissue, such as diabetic retinopathy, hypertension, HIV/AIDS-related retinitis, and age-related macular degeneration. These conditions are some of the leading causes of blindness, especially in the developing world, but can often be prevented if screened and diagnosed in early stages.

Velten, Andreas, Di Wu, Belen Masia, Adrian Jarabo, Christopher Barsi, Chinmaya Joshi, Everett Lawson, Moungi Bawendi, Diego Gutierrez, and Ramesh Raskar. “Imaging the propagation of light through scenes at picosecond resolution.” Communications of the ACM 59, no. 9 (2016): 79-86.

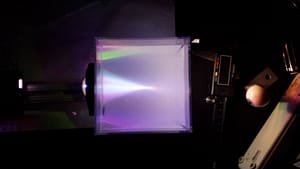

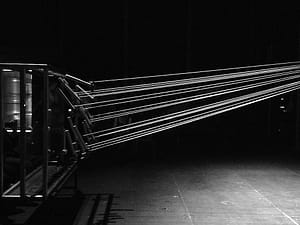

We present a novel imaging technique, which we call femto-photography, to capture and visualize the propagation of light through table-top scenes with an effective exposure time of 1.85 ps per frame. This is equivalent to a resolution of about one half trillion frames per second; between frames, light travels approximately just 0.5 mm. Since cameras with such extreme shutter speed obviously do not exist, we first re-purpose modern imaging hardware to record an ensemble average of repeatable events that are synchronized to a streak sensor, in which the time of arrival of light from the scene is coded in one of the sensor’s spatial dimensions. We then introduce reconstruction methods that allow us to visualize the propagation of femtosecond light pulses through the scenes. Given this fast resolution and the finite speed of light, we observe that the camera does not necessarily capture the events in the same order as …
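The frame rate and per-frame light travel quoted above follow directly from the 1.85 ps exposure time. The short check below is only a back-of-the-envelope sketch: the function names are illustrative, and it assumes frames are counted back to back and that light travels at its vacuum speed.

```python
# Back-of-the-envelope check of the figures quoted in the abstract.
# Assumptions: back-to-back frames, light travelling at its vacuum speed.
C_VACUUM_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact SI value)
EXPOSURE_S = 1.85e-12             # effective exposure time per frame, from the abstract


def frames_per_second(exposure_s: float) -> float:
    """Equivalent frame rate if one frame is taken per exposure interval."""
    return 1.0 / exposure_s


def light_travel_mm(exposure_s: float) -> float:
    """Distance light covers in vacuum during one exposure, in millimetres."""
    return C_VACUUM_M_PER_S * exposure_s * 1e3


if __name__ == "__main__":
    print(f"{frames_per_second(EXPOSURE_S):.3e} frames per second")
    print(f"{light_travel_mm(EXPOSURE_S):.3f} mm of light travel per frame")
```

Both results land close to the rounded values in the abstract: a little over half a trillion frames per second and roughly half a millimetre of travel per frame.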

Sinha, Shantanu, Nickolaos Savidis, Everett Lawson, and Ramesh Raskar. “Replacing the Slit Lamp with a Mobile Multi-Output Projector Device for Anterior Segment Imaging.” Investigative Ophthalmology & Visual Science 56, no. 7 (2015): 3162-3162.

Lawson, Matthew Everett. “A Priori vision: the transcendence of pre-ontological sight: the disparity of externalizing the internal architecture of creation.” MS Thesis, Massachusetts Institute of Technology, 2012.

The completion of any visual work is not an arrival, but furthered from the origin, the inner plane of perspective, which is so readily lent from the context of communicating the seemingly coded space from which I am inspired. The closest visual language within my …

Lawson, Matthew Everett, and Ramesh Raskar. “Smart phone administered fundus imaging without additional imaging optics.” Investigative Ophthalmology & Visual Science 55, no. 13 (2014): 1609-1609.

Velten, Andreas, Di Wu, Adrian Jarabo, Belen Masia, Christopher Barsi, Chinmaya Joshi, Everett Lawson, Moungi Bawendi, Diego Gutierrez, and Ramesh Raskar. “Femto-photography: capturing and visualizing the propagation of light.” ACM Transactions on Graphics (ToG) 32, no. 4 (2013): 1-8.

We present femto-photography, a novel imaging technique to capture and visualize the propagation of light. With an effective exposure time of 1.85 picoseconds (ps) per frame, we reconstruct movies of ultrafast events at an equivalent resolution of about one half trillion frames per second. Because cameras with this shutter speed do not exist, we re-purpose modern imaging hardware to record an ensemble average of repeatable events that are synchronized to a streak sensor, in which the time of arrival of light from the scene is coded in one of the sensor’s spatial dimensions. We introduce reconstruction methods that allow us to visualize the propagation of femtosecond light pulses through macroscopic scenes; at such fast resolution, we must consider the notion of time-unwarping between the camera’s and the world’s space-time coordinate systems to take into account effects associated with the finite speed of light …
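One way to picture the time-unwarping step is as a per-point correction for the time light needs to travel from a scene point to the camera. The sketch below assumes that simple model (camera time equals world time plus distance divided by the speed of light); the function names and the two example events are hypothetical and are not taken from the paper's implementation.

```python
# Minimal sketch of time-unwarping under an assumed model:
#   camera_time = world_time + (distance from scene point to camera) / c
# The events and distances below are invented for illustration.
C_M_PER_PS = 299_792_458.0 * 1e-12  # metres travelled by light per picosecond


def camera_time_ps(world_time_ps: float, distance_m: float) -> float:
    """Time at which the camera records an event that happened at world_time_ps."""
    return world_time_ps + distance_m / C_M_PER_PS


def unwarp_time_ps(recorded_time_ps: float, distance_m: float) -> float:
    """Undo the propagation delay to recover the event's world time."""
    return recorded_time_ps - distance_m / C_M_PER_PS


# Event A happens first but far from the camera; event B happens later but closer.
EVENT_A = {"world_time_ps": 0.0, "distance_m": 0.9}
EVENT_B = {"world_time_ps": 500.0, "distance_m": 0.6}

if __name__ == "__main__":
    for name, event in (("A", EVENT_A), ("B", EVENT_B)):
        seen = camera_time_ps(event["world_time_ps"], event["distance_m"])
        restored = unwarp_time_ps(seen, event["distance_m"])
        print(f"event {name}: recorded at {seen:.1f} ps, unwarped to {restored:.1f} ps")
```

In this toy case the camera records event B before event A even though A happened first in the world frame, which is the kind of ordering effect the unwarping is meant to correct.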

Velten, Andreas, Di Wu, Adrian Jarabo, Belen Masia, Christopher Barsi, Everett Lawson, Chinmaya Joshi, Diego Gutierrez, Moungi G. Bawendi, and Ramesh Raskar. “Relativistic ultrafast rendering using time-of-flight imaging.” In ACM SIGGRAPH 2012 Talks, pp. 1-1. 2012.

We capture ultrafast movies of light in motion and synthesize physically valid visualizations. The effective exposure time for each frame is under two picoseconds (ps). Capturing a 2D video with this time resolution is highly challenging, given the low signal-to-noise ratio (SNR) associated with ultrafast exposures, as well as the absence of 2D cameras that operate at this time scale. We re-purpose modern imaging hardware to record an average of ultrafast repeatable events that are synchronized to a streak tube, and we introduce reconstruction methods to visualize propagation of light pulses through macroscopic scenes.

Velten, Andreas, Everett Lawson, Andrew Bardagjy, Moungi Bawendi, and Ramesh Raskar. “Slow art with a trillion frames per second camera.” In ACM SIGGRAPH 2011 Talks, pp. 1-1. 2011.

How will the world look with a one trillion frame per second camera? Although such a camera does not exist today, we converted high end research equipment to produce conventional movies at 0.5 trillion (5 × 10¹¹) frames per second, with light moving barely 0.6 mm in each frame. Our camera has the game changing ability to capture objects moving at the speed of light. Inspired by the classic high speed photography art of Harold Edgerton [Kayafas and Edgerton 1987] we use this camera to capture movies of several scenes.

Pamplona, V., E. Passos, Jan Zizka, M. Oliveira, Everett Lawson, Esteban Clua, and Ramesh Raskar. “Catra: cataract probe with a lightfield display and a snap-on eyepiece for mobile phones.” In Proc. SIGGRAPH, vol. 11, pp. 7-11. 2011.

Pamplona, Vitor F., Erick B. Passos, Jan Zizka, Manuel M. Oliveira, Everett Lawson, Esteban Clua, and Ramesh Raskar. “CATRA: interactive measuring and modeling of cataracts.” ACM Transactions on Graphics (TOG) 30, no. 4 (2011): 1-8.

We introduce an interactive method to assess cataracts in the human eye by crafting an optical solution that measures the perceptual impact of forward scattering on the foveal region. Current solutions rely on highly-trained clinicians to check the back scattering in the crystalline lens and test their predictions on visual acuity tests. Close-range parallax barriers create collimated beams of light to scan through sub-apertures, scattering light as it strikes a cataract. User feedback generates maps for opacity, attenuation, contrast and sub-aperture point-spread functions. The goal is to allow a general audience to operate a portable high-contrast light-field display to gain a meaningful understanding of their own visual conditions. User evaluations and validation with modified camera optics are performed. Compiled data is used to reconstruct the individual’s cataract-affected view, offering a novel approach for capturing …

Pandharkar, Rohit, Andreas Velten, Andrew Bardagjy, Everett Lawson, Moungi Bawendi, and Ramesh Raskar. “Estimating motion and size of moving non-line-of-sight objects in cluttered environments.” In CVPR 2011, pp. 265-272. IEEE, 2011.

We present a technique for motion and size estimation of non-line-of-sight (NLOS) moving objects in cluttered environments using a time of flight camera and multipath analysis. We exploit relative times of arrival after reflection from a grid of points on a diffuse surface and create a virtual phased-array. By subtracting space-time impulse responses for successive frames, we separate responses of NLOS moving objects from those resulting from the cluttered environment. After reconstructing the line-of-sight scene geometry, we analyze the space of wavefronts using the phased array and solve a constrained least squares problem to recover the NLOS target location. Importantly, we can recover target’s motion vector even in presence of uncalibrated time and pose bias common in time of flight systems. In addition, we compute the upper bound on the size of the target by backprojecting the extremas of the time profiles …
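The frame-subtraction idea in this abstract can be illustrated with a toy example: returns from static clutter are identical in successive frames and cancel, while the moving target's return shifts in time and survives the difference. The sketch below is an illustration only. The profiles, the `add_target` helper, and the threshold are invented, and it does not attempt the phased-array analysis or the constrained least squares step.

```python
# Toy space-time profiles: one row per observed wall point, one column per time bin.
# All values are invented for illustration.
STATIC_CLUTTER = [
    [0.0, 5.0, 0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 5.0, 0.0, 2.0, 0.0],
]


def add_target(profile, arrivals, strength=3.0):
    """Return a copy of the profile with a moving target's return added.

    arrivals[i] is the time bin in which the target's reflection reaches row i.
    """
    out = [row[:] for row in profile]
    for row_index, time_bin in enumerate(arrivals):
        out[row_index][time_bin] += strength
    return out


def frame_difference(previous, current):
    """Subtract successive frames bin by bin; static returns cancel to zero."""
    return [[c - p for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(previous, current)]


def changed_bins(difference, threshold=0.5):
    """List (row, time_bin) pairs whose change exceeds the threshold."""
    return [(i, j)
            for i, row in enumerate(difference)
            for j, value in enumerate(row)
            if abs(value) > threshold]


# The target's return arrives one time bin later in the second frame.
FRAME_A = add_target(STATIC_CLUTTER, arrivals=[3, 4])
FRAME_B = add_target(STATIC_CLUTTER, arrivals=[4, 5])

if __name__ == "__main__":
    print(changed_bins(frame_difference(FRAME_A, FRAME_B)))
```

Only the bins touched by the target remain after subtraction, even where the target's return overlaps a clutter return in the first frame.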

Kirmani, Ahmed, Andreas Velten, Tyler Hutchison, M. Everett Lawson, Vivek K. Goyal, M. Bawendi, and Ramesh Raskar. “Reconstructing an image on a hidden plane using ultrafast imaging of diffuse reflections.” Submitted, May (2011).

An ordinary camera cannot photograph an occluded scene without the aid of a mirror for lateral visibility. Here, we demonstrate the reconstruction of images on hidden planes that are occluded from both the light source and the camera sensor, using only a Lambertian (diffuse) surface as a substitute for a mirror. In our experiments, we illuminate a Lambertian diffuser using a femtosecond pulsed laser and time-sample the scattered light using a streak camera with picosecond temporal resolution. We recover the hidden image from the time-resolved data by solving a linear inversion problem. We develop a model for the time-dependent scattering of a light impulse from diffuse surfaces, which we use with ultra-fast illumination and time-resolved sensing to computationally reconstruct planar black and white patterns that cannot be recovered with conventional optical imaging methods.
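The linear inversion mentioned above can be sketched in miniature. If each time-resolved measurement is a weighted sum of the reflectances of patches on the hidden plane, the hidden pattern can be estimated by least squares. Everything specific below is an assumption for illustration: the mixing weights in `FORWARD_MODEL` are made up rather than derived from the paper's scattering model, and the plain normal-equations solver stands in for whatever inversion method the authors used.

```python
# Rows: time-resolved measurements. Columns: patches on the hidden plane.
# The weights are invented; a real forward model would come from scene geometry.
FORWARD_MODEL = [
    [1.0, 0.5, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 0.5, 1.0],
    [0.2, 0.2, 0.2],
]
HIDDEN_PATTERN = [1.0, 0.0, 1.0]  # toy black-and-white pattern (1 = white)


def transpose(m):
    return [list(col) for col in zip(*m)]


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def least_squares(a, y):
    """Estimate x from y = a x through the normal equations (a^T a) x = a^T y."""
    at = transpose(a)
    return solve(matmul(at, a), matvec(at, y))


if __name__ == "__main__":
    measurements = matvec(FORWARD_MODEL, HIDDEN_PATTERN)  # simulated, noise-free
    print([round(v, 6) for v in least_squares(FORWARD_MODEL, measurements)])
```

With noise-free simulated measurements the toy pattern is recovered almost exactly. Real measurements are noisy and the forward model is far larger, so a practical reconstruction would likely need regularization, which this sketch omits.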

ABSTRACT: A projector and one or more optical components project a light pattern that scans at least a portion of an anterior segment of an

Indirect, diffuse illumination may be employed, rather than direct illumination of the retina through the pupil. For indirect illumination, a cool-to-the-touch light source is pressed

© 2026 M. Everett Lawson